In the prior blog post, I provided a checklist of some metrics that would help you assemble your defense if it was asserted that your database was the source of performance problems. But those ratios and indicators are just circumstantial evidence of probable innocence. Here comes the DNA test. It's hard. It's time consuming. It's complex. But the analytical effort just might help get you out of the hot seat and properly direct a performance issue to application or networking teams...

To perform the DNA test, you need transaction event monitor data and database snapshot data. In the very first blog post on May 28, 2007, class preparation instructions were provided. In these instructions, you hopefully executed two database snapshots 15 minutes apart concurrently with executing an event monitor for the same 15 minute period. It is important that the time periods match as close as possible because you will need to connect two otherwise disparate sources of performance data. If you haven't followed these instructions yet, now would be a good time to do so - but you don't need to transfer the event monitor table data to a laptop (you can work directly with the tables in your monitored database).

The Big Picture

We need to figure out, for a given time period, the average database transaction response time. A database transaction begins with the first SQL call and ends upon execution of a commit or rollback command. Generally, the database transaction response time mirrors the overall end user "end-to-end" response time. I readily acknowledge that some application processing and network time may occur before the first SQL call and after the commit or rollback. I my experience working with customers for the last 12 years, Database Transaction response time usually represents 95-98% of End-to-End User response time.

Within the scope of a database transaction, several time consuming events can occur. Time consumed by the database (that we can measure) includes:

- CPU time

- Sort time

- Lock time

- Bufferpool read I/O time

- Bufferpool write I/O time

- Direct read I/O time

- Direct write I/O time

The above times are often called "Inside DB2 Time". Transaction time can also be consumed outside of the database by:

- Network time

- Application processing time

The Basic Math

We begin by finding the average Database Transaction time for a given time period. From this average transaction time (TXAET), we subtract the CPU time, Sort Time, Lock Time, and I/O times (collectively the Inside DB2 Times). What is left over, the difference, if any, is time that the database cannot account for or does not own; this is Outside DB2 Time. To prove that your database is NOT GUILTY of degrading business performance, you want to see a high percentage of time Outside of the database.

Step 1: Determine TXAET, TXAVGCPU, TXAVGLOCK, and EVTTXCNT

Please note that the transaction event monitor reports CPU time consumed in microseconds, but, however, most other times in DB2 LUW are reported as milliseconds. For this exercise, we will convert all times to microseconds.

RUN the following SQL query against your transaction event monitor table:

select avg(bigint(timestampdiff(1,char(stop_time - uow_start_time)))) as TXAET, avg(user_cpu_time + system_cpu_time) as TXAVGCPU, avg(lock_wait_time)*1000 as TXAVGLOCK, count(*) as EVTTXCNT from classlab.xact_classlab;

The timestampdiff "1" computes the time difference between two timestamps in microseconds. If your SQL query errors out with a buffer overflow, try changing to timestampdiff "2" which finds the difference in seconds - then multiply seconds by 1,000,000. You can also try using FLOAT instead of BIGINT.

The average lock wait time is multiplied by 1,000 to convert from milliseconds to microseconds.

EVTTXCNT will tell us how many transactions completed while the event monitor was active.

Step 2: Compute SNAPTXCNT

Look at your second database snapshot, "dbsnap2.txt" Add together the number of Commit Statements Attempted plus Rollback Statements Attempted. This is SNAP2TXCNT.

Look at your first database snapshot, "dbsnap1.txt". Add together the number of Commit Statements Attempted plus Rollback Statements Attempted. This is SNAP1TXCNT.

Subtract SNAP1TXCNT from SNAP2TXCNT giving SNAPTXCNT.

If you followed the preparation instructions closely, the delta values from the two snapshots should sync up with the activity and time period reflected by the event monitor.

Your value for SNAPTXCNT should very closely match EVTTXCNT. If there is a difference of more than just a few transactions (ideally they should be equal), then your snapshot data and event monitor data is out of sync and you should start over with the preparation instructions.

Step 3: Compute TXAVGSORT, the average sort time, in microseconds, consumed by each transaction.

- From the second snapshot, dbsnap2.txt, find "Total sort time (ms)" as SNAP2SORTMS.

- From the first snapshot, dbsnap1.txt, find "Total sort time (ms)" as SNAP1SORTMS.

- Subtract SNAP1SORTMS from SNAP2SORTMS giving SNAPSORTMS.

- Multiply SNAPSORTMS by 1000 giving SNAPSORTMICRO.

- Divide SNAPSORTMICRO by SNAPTXCNT giving TXAVGSORT.

Step 4: Compute TXAVGBPRIO, the average bufferpool read I/O time, in microseconds, per transaction.

- From the second snapshot, dbsnap2.txt, find "Total buffer pool read time (milliseconds)" as SNAP2BPRIOMS.

- From the first snapshot, dbsnap1.txt, find "Total buffer pool read time (milliseconds)" as SNAP1BPRIOMS.

- Subtract SNAP1BPRIOMS from SNAP2BPRIOMS giving SNAPBPRIOMS.

- Multiply SNAPBPRIOMS by 1000 giving SNAPBPRIOMICRO.

- Divide SNAPBPRIOMICRO by SNAPTXCNT giving TXAVGBPRIO.

Step 5: Compute TXAVGBPWIO, the average bufferpool write I/O time, in microseconds, per transaction.

- From the second snapshot, dbsnap2.txt, find "Total buffer pool write time (milliseconds)" as SNAP2BPWIOMS.

- From the first snapshot, dbsnap1.txt, find "Total buffer pool write time (milliseconds)" as SNAP1BPWIOMS.

- Subtract SNAP1BPWIOMS from SNAP2BPWIOMS giving SNAPBPWIOMS.

- Multiply SNAPBPWIOMS by 1000 giving SNAPBPWIOMICRO.

- Divide SNAPBPWIOMICRO by SNAPTXCNT giving TXAVGBPWIO.

Step 6: Compute TXAVGDRIO, the average direct read I/O time, in microseconds, per transaction.

- From the second snapshot, dbsnap2.txt, find "Direct reads elapsed time (ms)" as SNAP2DRIOMS.

- From the first snapshot, dbsnap1.txt, find "Direct reads elapsed time (ms)" as SNAP1DRIOMS.

- Subtract SNAP1DRIOMS from SNAP2DRIOMS giving SNAPDRIOMS.

- Multiply SNAPDRIOMS by 1000 giving SNAPDRIOMICRO.

- Divide SNAPDRIOMICRO by SNAPTXCNT giving TXAVGDRIO.

Step 7: Compute TXAVGDWIO, the average direct write I/O time, in microseconds, per transaction.

- From the second snapshot, dbsnap2.txt, find "Direct write elapsed time (ms)" as SNAP2DWIOMS.

- From the first snapshot, dbsnap1.txt, find "Direct write elapsed time (ms)" as SNAP1DWIOMS.

- Subtract SNAP1DWIOMS from SNAP2DWIOMS giving SNAPDWIOMS.

- Multiply SNAPDWIOMS by 1000 giving SNAPDWIOMICRO.

- Divide SNAPDWIOMICRO by SNAPTXCNT giving TXAVGDWIO.

Step 8: Compute TXINSIDE, the amount of Inside DB2 Time (that we can reasonably account for) per transaction in microseconds

- TXINSIDE = TXAVGCPU + TXAVGLOCK + TXAVGSORT + TXAVGBPRIO + TXAVGBPWIO + TXAVGDRIO + TXAVGDWIO

Step 9: Compute TXOUTSIDE, the amount of Outside DB2 Time (that we cannot account for) per transaction in microseconds

- TXOUTSIDE = TXAET - TXINSIDE

Step 10: Compute INSIDEPCT, the percentage of time consumed by Inside DB2 Time.

- INSIDEPCT = TXINSIDE * 100 / TXAET

Step 11: Compute OUTSIDEPCT, the percentage of time not consumed by the DB2 database

- OUTSIDEPCT = TXOUTSIDE * 100 / TXAET

- CPUPCT = TXAVGCPU * 100 / TXINSIDE

- SORTPCT = TXAVGSORT * 100 / TXINSIDE

- LOCKPCT = TXAVGLOCK * 100 / TXINSIDE

- BPRIOPCT = TXAVGBPRIO * 100 / TXINSIDE

- BPWIOPCT = TXAVGBPWIO * 100 / TXINSIDE

- DRIOPCT = TXAVGDRIO * 100 / TXINSIDE

- DWIOPCT = TXAVGDWIO * 100 / TXINSIDE

Divide all of the computed average times by 1,000 to convert to milliseconds, or divide all of the computed average times by 1,000,000 to convert to seconds. Seconds seems to be the most popular scale amongst database professionals.

Step 20: Make it attractive for management

Congratulations! You made it to the end of the math! Now put all of your numbers into Excel or PowerPoint, or equivalent open source products, and create some pretty graphs for management. Everyone knows that management likes graphs.

DISCLAIMERS and IMPORTANT NOTES

Don't be alarmed if your Inside DB2 Time (TXINSIDE) adds up to more than, or is greater than, the Database Transaction Average Elapsed Time (TXAET). DB2 can consume CPU time, Sort time, and I/O time in parallel, so it is possible that the sum of inside time components can be greater than the database transaction response time. We love DB2 for its parallelism. Zoom zoom.

Because DB2 can consume time in parallel, the scale is potentially tipped in favor of high Inside DB2 Time. So, if you complete this analysis and find that your percentage of Inside DB2 Time is a relatively small percent (less than 30%), then you have a very strong DNA test argument in favor of "It's not your or your database's fault!"

If the percentage of Inside DB2 Time is high, then the DB2 database performance is at fault and the DBA truly does own the performance "opportunity for improvement". If this is the case, use the Inside Time percentages for CPU, Sort, Lock, and I/O to help determine where your resource bottleneck is. We'll cover next steps in future blog posts.

A Real World Example

I teach this class at IDUG, at various regional DB2 User groups, and on site at company locations. Last week at the Heart of America DB2 Users Group, one of the students had these values:

- Transaction Count: 2,985

- Transaction Average Elapsed time: 68,066

- Transaction CPU time: 31,892 - 46%

- Transaction Lock time: 0 - 0%

- Transaction Bufferpool Read I/O time: 9,842 - 14%

- Transaction Bufferpool Write I/O time: 2,038 - 3%

- Transaction Read & Write Direct I/O time: 1,227 - 2%

- Transaction Sort time: 5,258 - 8%

- Outside DB2 Time: 17,810 - 26%

- Transactions per Second: 2.8

All times shown in microseconds. Percentages are rounded. It is quite common for CPU time to be the highest resource consumer thanks to large bufferpools and hidden physical design flaws resulting in scans.

Frankly, now that you know how difficult this analysis is to do by hand and the steps involved, I hope you will search the internet to find a solution that automates all of this for you...

Now that you've searched Google and discovered that only DBI provides a solution for analyzing DB2 LUW transaction response times and service level attainments, please have a closer look.

DBI's Brother-Thoroughbred® was specifically designed to help DBA teams manage performance accountability, identify ownership of issues, accurately ascertain resource bottlenecks inside the database, and monitor achievement of Service Level Agreements (SLAs).

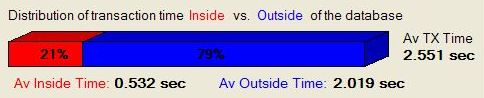

This Brother-Thoroughbred image shows the average database transaction response time, how much time is inside the database (owned by the DBA team), and how much time is outside the database.

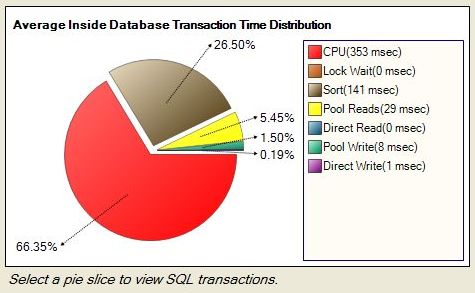

This Brother-Thoroughbred image shows the time distribution of time spent inside the database. The largest pie slice represents the primary resource bottleneck. Clicking on a pie slice will automatically take you to Brother-Panther's statement analysis sorted according to the resource bottleneck selected. Click, click, solved!

Just for Fun

I found a cool retirement calculator the other day and thought you might find it helpful. Check out: http://www.ing-usa.com/us/individuals/planningtools/calculatorstools/retirement/index.htm

Cheers,

Scott

Scott Hayes

President & CEO, DBI

IBM DB2 GOLD Consultant